Over 18 years in infrastructure engineering, I’ve repeatedly faced the challenge of designing systems that don’t just scale but stay available when individual components fail. In this article, I share my perspective on load balancing not as a collection of tools, but as a core architectural discipline. We’ll compare bare metal and Kubernetes approaches, look at when to choose HAProxy over NGINX, and walk through the building blocks of a fault-tolerant system.

The Foundation: Layer 4 vs. Layer 7

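Every solution starts with choosing an OSI layer. Layer 4 (TCP/UDP) load balancing makes decisions using only network and transport headers: addresses and ports. It’s fast, simple, and a natural fit for non-HTTP protocols such as PostgreSQL, Redis, or VoIP signaling. gRPC can be balanced at L4 too, but because it multiplexes many calls over long-lived HTTP/2 connections, an L4 balancer spreads connections rather than individual requests. For TCP workloads, HAProxy’s event-driven architecture often delivers excellent throughput and low latency; note that open-source HAProxy does not proxy generic UDP traffic.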

Layer 7 (HTTP/HTTPS), by contrast, inspects request content: URLs, headers, and methods. This enables powerful features such as host- or path-based routing, TLS termination, cookie-based session persistence, and WAF integration. The flexibility has a price: parsing every request, and decrypting TLS before that, costs noticeably more CPU and memory than forwarding byte streams. The choice between layers is always a trade-off between performance and functionality.
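To make the difference concrete, here is a minimal Python sketch of the information each layer has to work with. The `Connection` and `HttpRequest` types, the pool names, and the routing rules are hypothetical illustrations, not any load balancer’s API; real L4 balancers also offer round-robin, least-connections, and other algorithms besides source hashing.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """All an L4 balancer sees: addresses and ports from the IP/TCP headers."""
    src_ip: str
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class HttpRequest:
    """What an L7 balancer sees after parsing (and, for HTTPS, decrypting) a request."""
    host: str
    path: str
    method: str


def pick_backend_l4(conn: Connection, backends: list[str]) -> str:
    # Hash the client IP so a given client keeps hitting the same backend,
    # without ever looking at the payload.
    digest = hashlib.sha256(conn.src_ip.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def pick_pool_l7(req: HttpRequest, pools: dict[str, list[str]]) -> list[str]:
    # Content-based routing is only possible once the HTTP request is parsed.
    if req.host == "api.example.com" or req.path.startswith("/api/"):
        return pools["api"]
    if req.path.startswith("/static/"):
        return pools["static"]
    return pools["web"]


pools = {"api": ["api-1", "api-2"], "static": ["static-1"], "web": ["web-1", "web-2"]}
print(pick_backend_l4(Connection("203.0.113.7", 51514, 5432), ["pg-1", "pg-2"]))
print(pick_pool_l7(HttpRequest("www.example.com", "/static/logo.png", "GET"), pools))  # ['static-1']
```

The L4 function never needs more than a handful of header fields, which is why it is cheap; the L7 function depends on fields that only exist after the balancer has buffered and parsed the request.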

Architectural Paradigms: Bare Metal vs. Kubernetes

On bare metal, high availability is built around an active/passive pair of load balancers. I prefer the classic HAProxy + Keepalived pairing: Keepalived uses VRRP to move a floating virtual IP (VIP) between nodes, while HAProxy on whichever node currently holds the VIP distributes traffic to the backends. This setup is predictable, stable, and independent of any orchestrator, which makes it ideal for mission-critical services, including the Kubernetes control plane itself (for example, a single stable endpoint in front of several kube-apiserver instances).
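The failover logic can be approximated with a small simulation, assuming a two-node setup where each node’s tracking script reports whether HAProxy is healthy and a failed check lowers that node’s priority by a fixed amount (a negative `weight` on a Keepalived tracked script behaves this way). This is not Keepalived’s code: node names, addresses, and numbers are hypothetical, and the sketch ignores advertisement timers and preemption settings.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass
class LoadBalancerNode:
    name: str
    ip: str
    priority: int              # configured VRRP priority (1-254)
    check_weight: int          # assumed negative: subtracted while the HAProxy check fails
    haproxy_ok: bool = True    # result of the (hypothetical) tracking script

    def effective_priority(self) -> int:
        # A failing tracked check with a negative weight lowers the priority.
        if not self.haproxy_ok and self.check_weight < 0:
            return max(1, self.priority + self.check_weight)
        return self.priority


def elect_master(nodes: list[LoadBalancerNode]) -> LoadBalancerNode:
    # VRRP (RFC 5798): the highest priority wins; ties go to the higher primary IP.
    return max(nodes, key=lambda n: (n.effective_priority(), IPv4Address(n.ip)))


lb1 = LoadBalancerNode("lb1", "10.0.0.11", priority=110, check_weight=-20)
lb2 = LoadBalancerNode("lb2", "10.0.0.12", priority=100, check_weight=-20)
print(elect_master([lb1, lb2]).name)  # lb1 holds the VIP

lb1.haproxy_ok = False                # HAProxy dies on lb1: 110 - 20 = 90 < 100
print(elect_master([lb1, lb2]).name)  # lb2 takes over the VIP
```

The design point worth copying is the arithmetic: the check weight (20) must exceed the priority gap between the nodes (110 - 100 = 10), otherwise a dead HAProxy never costs its node the VIP.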

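Kubernetes, on the other hand, handles failure at the level of application instances rather than the load balancer. A Service sends traffic only to Pods whose readiness probes pass (tracked through EndpointSlices), while controllers such as Deployments replace failed Pods and the kubelet restarts containers that fail their liveness probes. Inside the cluster, kube-proxy programs packet-forwarding rules on each node (for example, iptables or IPVS) to steer Service traffic. External HTTP traffic typically enters through an Ingress controller (often HAProxy- or NGINX-based); TCP/UDP services are usually exposed as LoadBalancer-type Services. On bare metal, nothing implements LoadBalancer Services out of the box, so you need MetalLB or Cilium to allocate and announce their IPs, which adds complexity. In short, Kubernetes doesn’t protect the load balancer itself; it keeps a set of healthy service instances behind it.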
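The readiness-driven behavior of a Service can be modeled in a few lines. This is a deliberately simplified sketch, assuming a hypothetical `Pod` record, container port 8080, and round-robin selection for simplicity; it is not how kube-proxy or the EndpointSlice controller are implemented.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    ip: str
    ready: bool  # outcome of the Pod's readiness probe


def serving_endpoints(pods: list[Pod], port: int = 8080) -> list[str]:
    # Only Ready Pods become serving endpoints; failing Pods simply drop out.
    return [f"{pod.ip}:{port}" for pod in pods if pod.ready]


pods = [
    Pod("web-a", "10.244.1.5", ready=True),
    Pod("web-b", "10.244.2.7", ready=False),  # e.g. still warming up
    Pod("web-c", "10.244.3.9", ready=True),
]
endpoints = serving_endpoints(pods)
rotation = itertools.cycle(endpoints)         # round-robin, for simplicity only
print([next(rotation) for _ in range(4)])
```

Nothing in this model decides *which* node receives external traffic in the first place; that part is the job of the Ingress controller or the LoadBalancer implementation.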

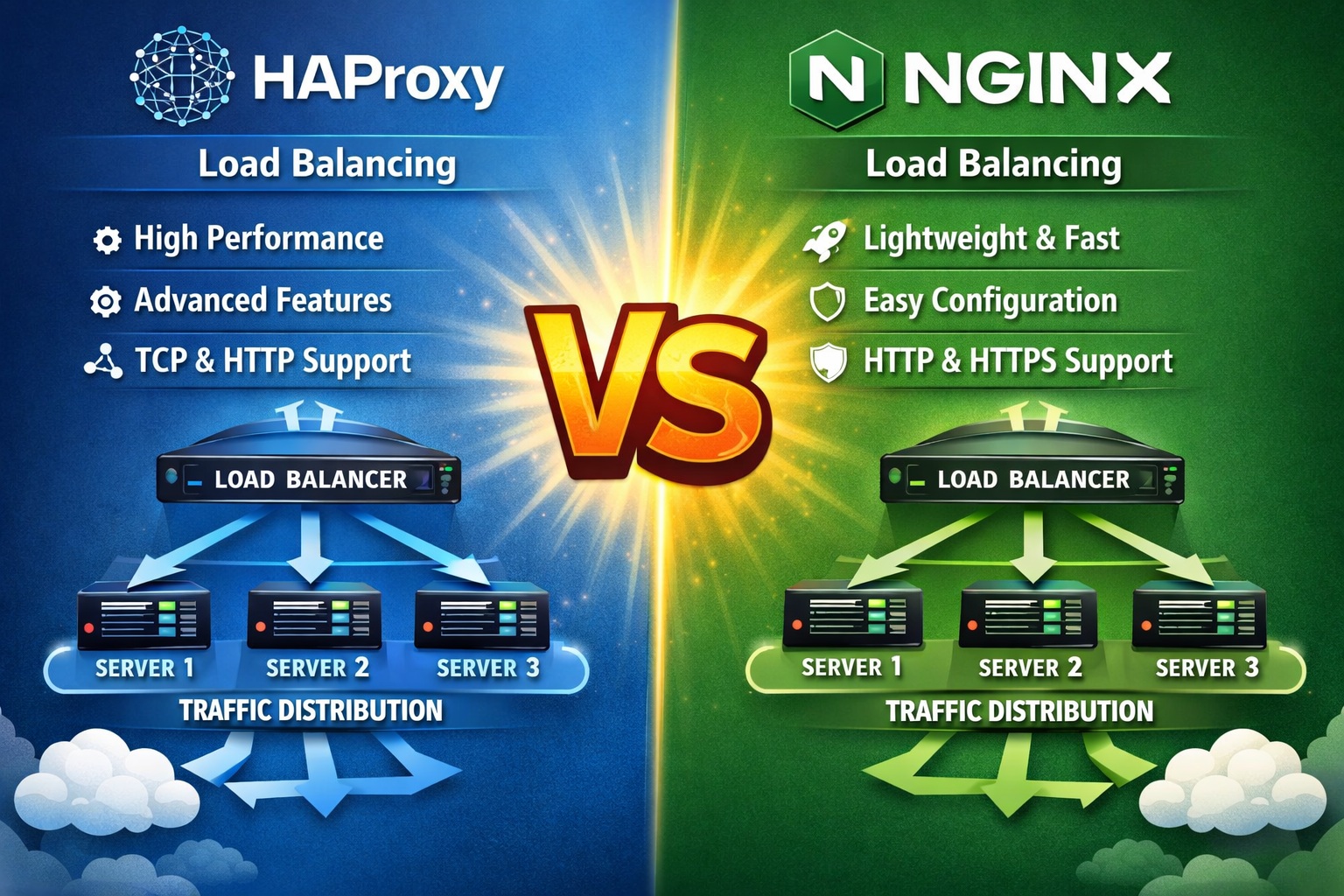

Tools: HAProxy vs. NGINX

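HAProxy is a purpose-built load balancer. Its configuration maps cleanly onto the proxying model (frontends receive traffic, backends define server pools), and its ACL language enables sophisticated routing rules. It excels at L4 balancing and offers advanced algorithms such as consistent hashing, which keeps most client-to-server mappings stable when a server is added or removed. When raw performance and flexibility are paramount, HAProxy is my top choice.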
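Consistent hashing is what makes hash-based balancing tolerant of pool changes. The generic Python sketch below builds a hash ring with virtual nodes; in HAProxy the equivalent behavior is enabled per backend with `hash-type consistent`, though its internal implementation differs. Server names, the key format, and the virtual-node count are illustrative assumptions.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring with virtual nodes (generic sketch, not HAProxy's code)."""

    def __init__(self, servers: list[str], vnodes: int = 100) -> None:
        # Place each server on the ring many times to even out the key distribution.
        self._ring = sorted(
            (self._hash(f"{server}#{i}"), server)
            for server in servers
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def lookup(self, key: str) -> str:
        # Walk clockwise to the first ring point at or after the key's hash,
        # wrapping around to the start of the ring.
        idx = bisect.bisect_left(self._points, self._hash(key)) % len(self._points)
        return self._ring[idx][1]


keys = [f"session-{i}" for i in range(10_000)]
full = HashRing(["app1", "app2", "app3"])
reduced = HashRing(["app1", "app2"])  # app3 removed from the pool
moved = sum(full.lookup(k) != reduced.lookup(k) for k in keys)
print(f"{moved} of {len(keys)} keys moved")  # only keys that lived on app3
```

With plain modulo hashing, removing one of three servers would remap roughly two thirds of all keys; on the ring, only the keys that lived on the removed server move, which protects cache hit rates and sticky workloads.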

NGINX is a universal tool: it shines as a web server, cache, and reverse proxy. Its L7 capabilities are extensive, but L4 balancing requires the stream module. Its hierarchical configuration is familiar to many engineers, but for pure proxying, HAProxy is often more efficient. The decision hinges on your priority: specialization or versatility.

Practice: Building a Fault-Tolerant Core

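The HAProxy + Keepalived combo is my battle-tested bare metal template. Keepalived is configured with priorities and tracking scripts that check HAProxy itself, not just network reachability, so a node whose HAProxy has died gives up the VIP. HAProxy, in turn, uses protocol-aware health checks, for example speaking the PostgreSQL wire protocol instead of merely opening a TCP connection, to decide which database backends are fit to receive traffic. On the kernel side, net.ipv4.ip_nonlocal_bind=1 lets HAProxy on the standby node bind to the VIP even though that address is not currently assigned there, so it is already listening when failover moves the VIP over.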
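As an illustration of a protocol-aware check, here is a minimal Python probe that sends the PostgreSQL `SSLRequest` message and expects the one-byte answer the wire protocol defines for it. This is a sketch for explaining the idea, not what HAProxy’s built-in `option pgsql-check` sends; the function name, host, port, and timeout are placeholders.

```python
import socket
import struct

SSL_REQUEST_CODE = 80877103  # request code defined by the PostgreSQL wire protocol


def postgres_responds(host: str, port: int = 5432, timeout: float = 2.0) -> bool:
    """Return True if the peer answers an SSLRequest the way a PostgreSQL server does."""
    packet = struct.pack("!ii", 8, SSL_REQUEST_CODE)  # Int32 length, Int32 request code
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(packet)
            reply = sock.recv(1)
    except OSError:  # refused, unreachable, or timed out
        return False
    # A PostgreSQL server replies with one byte: 'S' (TLS available) or 'N' (not).
    return reply in (b"S", b"N")
```

A positive answer only proves that something speaking PostgreSQL is listening. Sending writes only to the primary also needs a role check, for example querying `pg_is_in_recovery()`, which a plain TCP check can never provide.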

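In Kubernetes, I prioritize proper graceful shutdown and well-designed liveness and readiness probes. Without them, even the best Ingress controller can send requests to Pods that are still starting or already shutting down, dropping connections during rollouts. For external traffic on bare metal, BGP mode (in MetalLB, or Cilium’s BGP control plane on top of its eBPF datapath) is preferable to Layer 2 (ARP) mode: in L2 mode a single elected node answers ARP for each service IP and receives all of its traffic, whereas BGP with ECMP on the upstream routers spreads that traffic across multiple nodes.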
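The shutdown sequence can be sketched with Python’s standard library alone. On SIGTERM (which the kubelet sends when a Pod is terminated), the server starts failing its readiness endpoint, keeps serving for a drain window while the Pod’s removal from endpoints propagates to kube-proxy and the Ingress controller, then stops accepting connections and waits for in-flight requests. The `/healthz` and `/ready` paths, port 8080, and the 10-second drain are assumptions; the drain plus the app’s own cleanup must fit within the Pod’s `terminationGracePeriodSeconds` (30 seconds by default).

```python
import signal
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

READY = threading.Event()
READY.set()  # start out ready; a real app might wait until warm-up completes


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/healthz":    # liveness: the process can still serve
            status = 200
        elif self.path == "/ready":    # readiness: should new traffic arrive here?
            status = 200 if READY.is_set() else 503
        else:
            status = 200               # stand-in for real application handlers
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, *args) -> None:
        pass  # keep the sketch quiet


class DrainingServer(ThreadingHTTPServer):
    daemon_threads = False  # non-daemon handler threads are joined by server_close()


def graceful_shutdown(server: DrainingServer, drain_seconds: float) -> None:
    READY.clear()               # 1. fail readiness: stop attracting new traffic
    time.sleep(drain_seconds)   # 2. keep serving while endpoint removal propagates
    server.shutdown()           # 3. stop the accept loop; serve_forever() returns


def main() -> None:
    server = DrainingServer(("0.0.0.0", 8080), ProbeHandler)
    # shutdown() blocks until serve_forever() exits, so it must run in another thread.
    signal.signal(signal.SIGTERM, lambda *_: threading.Thread(
        target=graceful_shutdown, args=(server, 10.0)).start())
    server.serve_forever()
    server.server_close()       # 4. wait for in-flight requests to finish
```

Calling `main()` from the container’s entrypoint wires everything together. The liveness endpoint deliberately keeps returning 200 during the drain: failing it would make the kubelet restart a container that is shutting down correctly.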

Conclusion

There’s no one-size-fits-all solution. For stateful services like PostgreSQL on physical servers, HAProxy + Keepalived remains the gold standard in my experience. For microservice HTTP apps in Kubernetes, I choose the HAProxy Ingress Controller for its performance and its support for L4 (TCP) routing through custom resources. My guiding principle: resilience must be designed in, not bolted on. Your choice should be driven not by a tool’s popularity, but by the specific engineering problem, your availability requirements, and your team’s expertise.
